AI is making the energy future sustainable, but its energy demand is enormous

Artificial Intelligence can enhance sustainability, but it is not inherently sustainable.

Whether Artificial Intelligence (AI) is good or bad depends not only on the value it can bring but also on its environmental consequences. Expectations for AI applications are especially high in the energy sector, where energy and electricity markets have increasingly turned to AI as a tool in recent years. AI can automate and optimize a range of energy-related processes, making them more efficient and cost-effective, improving energy management, and reducing negative impacts on the environment.

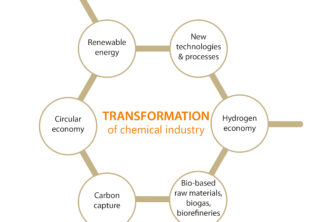

AI is considered a key element in achieving a sustainable, environmentally friendly, and efficient energy future. Its applications, however, extend across all industries: AI has become a general-purpose technology.

When considering risks, we often think of job losses or data privacy rather than the ecological footprint. AI is not inherently sustainable, but it often aids in achieving sustainability goals more quickly. This is demonstrated by use cases in the energy sector.

Use cases in the energy sector

- AI models analyze extensive data to provide precise forecasts of renewable energy generation by integrating weather data, historical consumption patterns, and other factors. This optimizes the efficiency of energy facilities, improves planning, and facilitates the integration of renewable energy into the power grid. AI helps to mitigate the fluctuations of renewable energy, and optimized charging and discharging make more efficient use of battery storage.

- AI supports the monitoring and control of smart grids through real-time data analysis. Intelligent algorithms can predict network disturbances, optimize load distribution, and take reactive measures to ensure reliable and efficient energy flow. AI enhances the security of energy infrastructures to protect the power grid from cyber-attacks.

- AI-powered predictive maintenance models enable proactive maintenance of energy generation facilities. These preventive measures reduce unplanned downtimes and extend the lifespan of the equipment, leading to more sustainable operations.

- AI-driven systems are revolutionizing the energy efficiency of residential and commercial buildings. By continuously analyzing user behavior in energy consumption, these systems learn and automatically adjust settings to optimize energy usage. This not only results in cost savings but also reduces the ecological footprint of buildings.

- AI enables personalized offerings for end customers by analyzing their consumption behavior. The AI provides proactive recommendations, from energy-saving tips to tailored tariff options or upgrades for energy-efficient devices. Tariff structures are dynamically optimized, and through continuous analysis of consumption behavior, the system suggests suitable tariffs that minimize costs and align with customer needs.

- AI-powered chatbots and virtual assistants optimize customer service by efficiently responding to inquiries. Available 24/7 with pre-programmed responses to frequently asked questions, they reduce wait times. By analyzing customer conversations and feedback, they continuously learn, improving their accuracy and efficiency over time.

These are just a few examples. In the coming years, the number of use cases across the entire energy industry is expected to increase significantly. Companies are developing their own GPT models, such as E.ON GPT, a generative artificial intelligence developed for the corporation. It aids in research and text processing and serves as a virtual sparring partner for employees' energy-related questions.

The popular chatbot ChatGPT has been available for nearly two years. Within just two months of its launch, it had around 100 million users, and the number and variety of such tools keep growing. Training AI tools requires feeding them large amounts of data, a process that is very energy-intensive. Use adds to the demand: each query to ChatGPT and similar tools consumes between three and nine watt-hours of electricity, and ChatGPT alone receives more than 195 million requests per day. Google, by comparison, processes nine billion search queries daily. A recent study by the VU Amsterdam School of Business and Economics estimates that by 2027, AI could consume as much electricity as Ireland. Additionally, cooling the data centers requires large amounts of water.
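To put the per-query figures in perspective, the short calculation below multiplies the quoted range of three to nine watt-hours by the quoted 195 million daily requests. It is only a rough sketch: it assumes every request falls within that range, and the function and variable names are illustrative, not taken from any source.

```python
# Figures quoted in the article; everything else is an illustrative assumption.
WH_PER_QUERY_LOW = 3            # watt-hours per query, lower bound
WH_PER_QUERY_HIGH = 9           # watt-hours per query, upper bound
REQUESTS_PER_DAY = 195_000_000  # daily ChatGPT requests


def daily_energy_mwh(wh_per_query: float, requests_per_day: int) -> float:
    """Return the daily electricity use in megawatt-hours (1 MWh = 1,000,000 Wh)."""
    return wh_per_query * requests_per_day / 1_000_000


low = daily_energy_mwh(WH_PER_QUERY_LOW, REQUESTS_PER_DAY)
high = daily_energy_mwh(WH_PER_QUERY_HIGH, REQUESTS_PER_DAY)
print(f"{low:.0f} to {high:.0f} MWh per day")  # 585 to 1755 MWh per day
```

On these assumptions, answering queries alone would require roughly 585 to 1,755 megawatt-hours of electricity per day, before any energy used for training is counted.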

AI consumes a significant amount of energy during training, and at the same time, the models are becoming larger to achieve higher accuracy. While companies worldwide are working to improve the efficiency of AI software, the demand for these tools continues to rise unabated. Researchers refer to this as the Jevons paradox: an increase in the efficiency with which a resource is used can lead to an increase, rather than a decrease, in the overall consumption of that resource. This contradicts the intuitive assumption that higher efficiency automatically leads to lower resource consumption. We need data centers that operate 100 percent on renewable energy and where the waste heat from the servers is put to meaningful use. Everything is interconnected. AI must take responsibility and set a good example by addressing its own energy and water footprint.
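The Jevons paradox mentioned above can be illustrated with a small calculation. The numbers are purely hypothetical and chosen only to show the mechanism: energy per query is halved, but the number of queries triples.

```python
def total_energy_wh(energy_per_query_wh: float, queries: int) -> float:
    """Total consumption is the energy per query times the number of queries."""
    return energy_per_query_wh * queries


# Hypothetical values, not measurements.
before = total_energy_wh(energy_per_query_wh=6.0, queries=100)  # less efficient, less demand
after = total_energy_wh(energy_per_query_wh=3.0, queries=300)   # twice as efficient, triple demand

print(f"before: {before:.0f} Wh, after: {after:.0f} Wh")  # before: 600 Wh, after: 900 Wh
print(f"change in total consumption: {after / before:.1f}x")  # 1.5x
```

Although each query needs only half the energy in this example, total consumption rises by half because demand grows faster than efficiency improves.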

Doris Höflich, Market Intelligence Senior Expert

Sources:

- VU Amsterdam School of Business and Economics

- enviaM Gruppe

- E.ON

- Press databases

- The image was created with AI (Copilot).